
Mason L. Wang

I am an EECS PhD student at MIT CSAIL, where I work with Professor Anna Huang. I am currently on leave and working at xAI. I am interested in audio, generative modeling, and signal processing. I received my master's in Electrical Engineering from Stanford University, where I worked in the Stanford Vision and Learning Lab and was advised by Jiajun Wu and Mert Pilanci. You can contact me at masonlwang32 [at] gmail [dot] com. You can also find me on Twitter and LinkedIn.


Publications


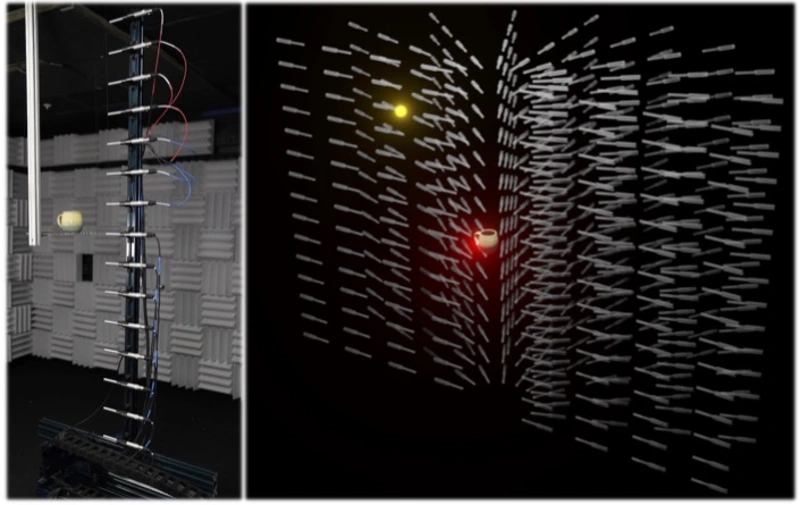

Hearing Anything Anywhere

Mason L. Wang*, Ryosuke Sawata*, Samuel Clarke, Ruohan Gao, Elliott Wu, Jiajun Wu

CVPR, 2024

video / paper / website / dataset / code / arXiv

We present a method for capturing real acoustic spaces from 12 room impulse response (RIR) measurements, letting us play any audio signal in the room and listen from any location and orientation. We develop an audio inverse-rendering framework that synthesizes the room's acoustics (monaural and binaural RIRs) at novel locations, enabling immersive auditory experiences such as simulated music playback.


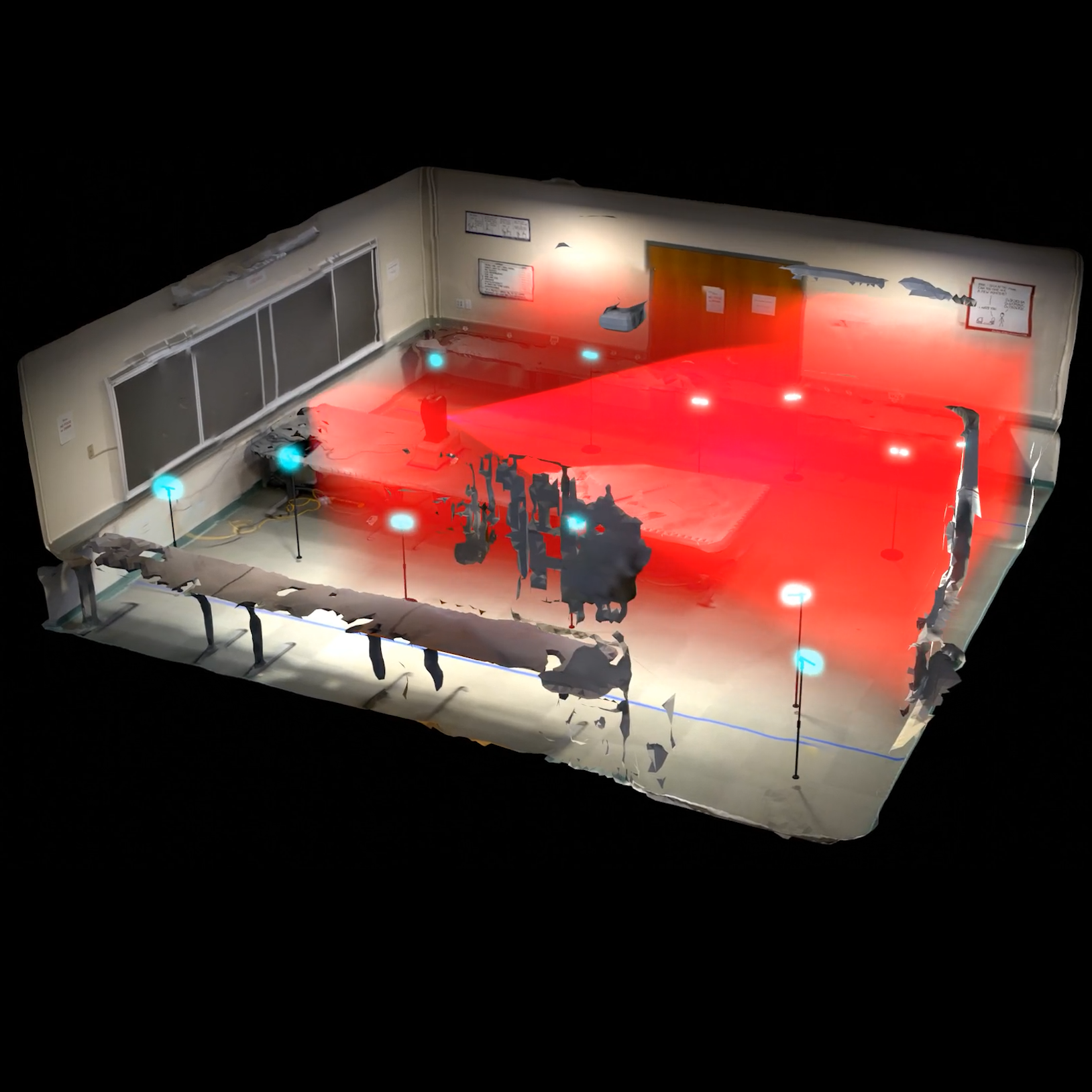

SoundCam: A Dataset for Finding Humans Using Room Acoustics

Mason L. Wang*, Samuel Clarke*, Jui-Hsien Wang, Ruohan Gao, Jiajun Wu

NeurIPS Datasets and Benchmarks, 2023

project page / video / arXiv

Humans induce subtle changes in a room's acoustic properties. We can observe these changes, either explicitly through RIR measurements or by playing and recording music in the room, and use them to determine a person's presence, location, and identity.


RealImpact: A Dataset of Impact Sound Fields for Real Objects

Samuel Clarke, Ruohan Gao, Mason L. Wang, Mark Rau, Julia Xu, Jui-Hsien Wang, Doug James, Jiajun Wu

CVPR, 2023 (Highlight, Top 2.5% of Submissions)

project page / video / arXiv

Everyday objects possess distinct sonic characteristics determined by their shape and material. RealImpact is the largest dataset of object impact sounds to date, with 150,000 recordings of impact sounds from 50 objects of varying shape and material.


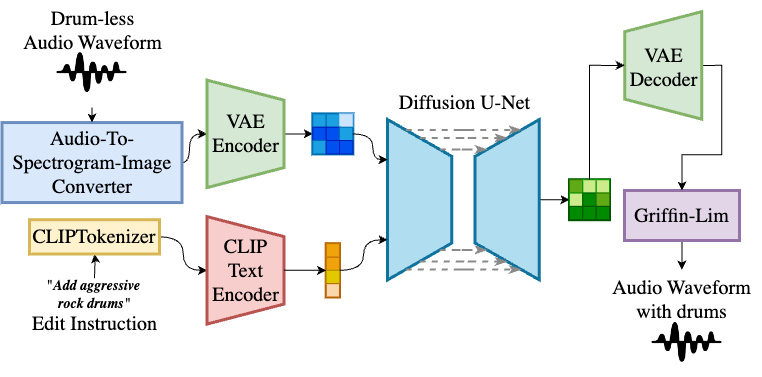

Subtractive Training for Music Stem Insertion Using Latent Diffusion Models

Ivan Villa-Renteria*, Mason L. Wang*, Zachary Shah*, Zhe Li, Soohyun Kim, Neelesh Ramachandran, Mert Pilanci

ICASSP, 2025

paper / examples

We use a dataset of full-mix songs, stem-subtracted songs, and LLM-generated edit instructions to train a diffusion model for stem insertion and editing.

Education and Experience


xAI

December 2025-Present

Member of Technical Staff

Palo Alto, CA


MIT

August 2024-December 2025

EECS PhD Student

Cambridge, Massachusetts


SONY AI

June 2024-August 2024

Research Intern, Music Foundation Model Team

Tokyo, Japan


Stanford University

September 2022-June 2024

M.S. in Electrical Engineering, specialization in Signal Processing and Optimization

GPA: 4.22/4.3

Course Assistant for ENGR 108 (3x), EE 178 (1x)

Research Assistant in CS (1x), EE (1x)


The University of Chicago

October 2018-June 2022

B.S. in Computer Science with a Specialization in Machine Learning

B.A. in Mathematics

GPA: 4.0/4.0

Honors: Odyssey Scholar, Enrico Fermi Scholar, Robert Maynard Hutchins Scholar, Summa Cum Laude


News


9/24/25: First-author submission to ICLR 2026!

9/17/25: Second-author submission to ICASSP 2026!

12/20/24: Subtractive Training is accepted to ICASSP 2025!

04/13/24: First-author submission to ISMIR 2024!

02/26/24: Hearing Anything Anywhere is accepted to CVPR 2024!

09/21/23: SoundCam is accepted to NeurIPS Datasets and Benchmarks 2023!

02/27/23: RealImpact is accepted to CVPR 2023!

Music Works


Graph of Notes


Website template based on Jon Barron's.